Indexing problems can quietly hurt your visibility in search. This guide from Mister Nguyen Agency explains the most common Google indexing issues and gives clear, actionable fixes so you can diagnose crawl errors, resolve noindex and robots.txt conflicts, fix canonicalization and sitemap errors, and improve your site structure to restore proper indexing.

The Silent Saboteurs: Robots.txt Disasters

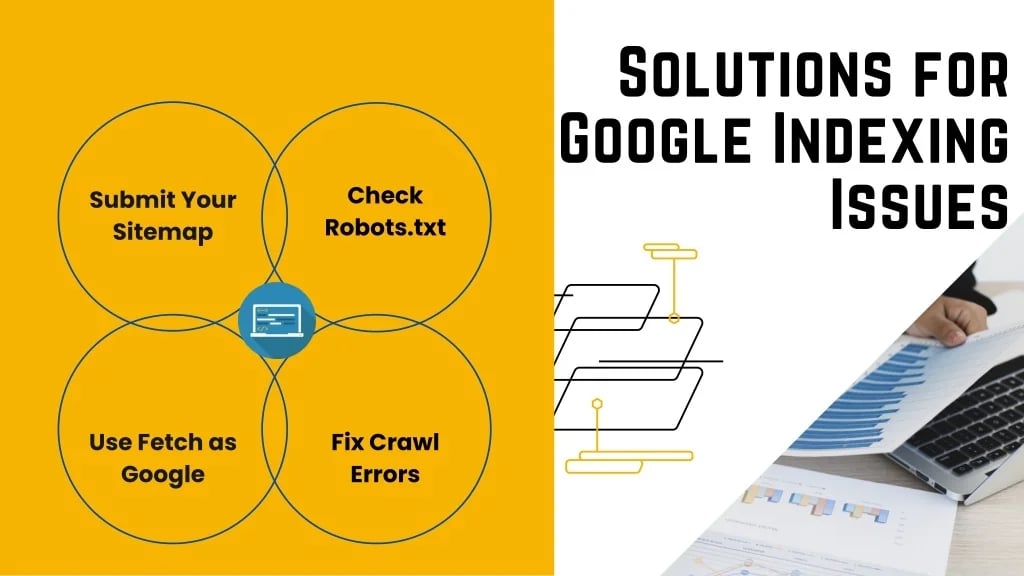

A misconfigured robots.txt file can silently block crawling: one stray "Disallow: /" under "User-agent: *" stops Google from crawling your entire site, a mistake Mister Nguyen Agency fixes weekly. You can cross-check blocked pages against Search Console's Page indexing report (see "Page indexing report – Search Console Help") and spot unexpected exclusions before traffic drops.

Common Misconfigurations to Avoid

Typical errors to avoid include blocking CSS and JavaScript files (which breaks rendering), writing rules whose letter case doesn't match your URLs (robots.txt paths are case-sensitive, so "Disallow: /Staging/" does not block /staging/), leaving staging rules live after launch, and blocking crawler access to sitemap.xml. A single overly broad rule such as "Disallow: /assets/" can stop Google from fetching critical rendering resources and hurt rich results.
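To see two of these mistakes in action, here is a minimal sketch that uses Python's standard-library `urllib.robotparser` against a hypothetical robots.txt for `example.com`. Keep in mind that this parser applies rules in file order and does not understand Google's `*` and `$` wildcards, so treat it as a quick sanity check rather than a replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt containing two of the mistakes described above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/
Disallow: /Staging/
Sitemap: https://example.com/sitemap.xml
"""

def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given user agent may not fetch under robots_txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return [url for url in urls if not parser.can_fetch(agent, url)]

urls = [
    "https://example.com/assets/site.css",       # rendering resource, blocked by /assets/
    "https://example.com/assets/app.js",         # rendering resource, blocked by /assets/
    "https://example.com/staging/home",          # NOT blocked: /Staging/ differs in case
    "https://example.com/products/blue-widget",  # allowed, as intended
]

for url in blocked_urls(ROBOTS_TXT, urls):
    print("BLOCKED:", url)
```

The CSS and JavaScript files come back blocked, while the lowercase staging URL slips through, which is exactly the pair of problems a real audit should catch.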

Testing Your Robots.txt for Accuracy

Use Search Console's robots.txt report (which replaced the older robots.txt Tester) and the URL Inspection tool, fetch the file with curl or wget, and test representative URLs as Googlebot to verify allow and disallow behavior. Automated crawlers miss nuances, so manually checking 10–20 key pages helps catch edge cases quickly.

Practical steps: confirm robots.txt returns HTTP 200, validate its syntax and wildcard rules, test specific URLs in Search Console, and review server logs for the 200 and 403 responses served to Googlebot over 24–48 hours. Track changes in the Page indexing report, and roll back problematic edits through versioned deployments to reduce downtime.
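For the log-review step, a small script can tally the status codes served to requests that identify as Googlebot. This sketch assumes Apache/Nginx "combined" log format, and the sample lines are invented. Because the user-agent string can be spoofed, confirm genuine Googlebot traffic with a reverse DNS lookup before acting on the numbers.

```python
import re
from collections import Counter

# Request line, status code, and user agent from a "combined" format log line.
COMBINED_LOG = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_statuses(lines):
    """Count status codes and collect non-200 paths for requests claiming to be Googlebot."""
    statuses = Counter()
    problems = []
    for line in lines:
        match = COMBINED_LOG.search(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip unparsable lines and other user agents
        statuses[match.group("status")] += 1
        if match.group("status") != "200":
            problems.append((match.group("status"), match.group("path")))
    return statuses, problems

UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET /robots.txt HTTP/1.1" 200 312 "-" "{UA}"',
    f'66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /products/blue-widget HTTP/1.1" 200 5120 "-" "{UA}"',
    f'66.249.66.1 - - [10/May/2024:10:00:09 +0000] "GET /assets/app.js HTTP/1.1" 403 153 "-" "{UA}"',
    '203.0.113.7 - - [10/May/2024:10:01:00 +0000] "GET / HTTP/1.1" 200 9000 "-" "Mozilla/5.0"',
]

statuses, problems = googlebot_statuses(sample_log)
print(dict(statuses))  # {'200': 2, '403': 1}
print(problems)        # [('403', '/assets/app.js')]
```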

The Visibility Trap: Meta Tag Mistakes

Misplaced meta robots tags silently hide pages from Google, killing visibility even when the content is excellent. Use Search Console's Page indexing report (formerly the Coverage report) to spot URLs listed as "Excluded by 'noindex' tag", and run a Screaming Frog crawl to find templates that emit a stray meta robots tag. For a full rundown, compare notes with 10 Common Google Indexing Issues & How to Fix Them.

Understanding Meta Noindex and Its Impact

A meta robots "noindex" directive explicitly tells Google not to index a page; if it sits on category, tag, or pagination templates, you will lose whole sections of organic traffic. Mister Nguyen Agency often uncovers template-level noindex tags that exclude hundreds or thousands of URLs, and you may see impressions drop within 24–72 hours after pages are excluded.

How to Fix Meta Tag Errors

Run a crawl with Screaming Frog or Sitebulb, filter for "noindex", and inspect the affected pages in Search Console's URL Inspection tool to confirm their live status. Remove or correct the meta robots tags in your CMS templates, redeploy, then request indexing for key URLs; verify the fixes in the Page indexing and Performance reports over the next 1–2 weeks.
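For a quick check alongside your crawler, the sketch below uses Python's built-in `html.parser` to flag pages whose meta robots (or meta googlebot) tag contains `noindex` or `none`. The sample HTML is hypothetical. A full crawler also handles JavaScript rendering, redirects, and template variations that this sketch ignores.

```python
from html.parser import HTMLParser

class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser already lowercases tag and attribute names, but not values.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            self.directives.extend(part.strip().lower() for part in content.split(","))

def is_noindexed(html: str) -> bool:
    """True if any robots meta tag tells Google not to index the page."""
    parser = MetaRobotsParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return any(d in ("noindex", "none") for d in parser.directives)

print(is_noindexed('<head><meta name="robots" content="noindex, follow"></head>'))  # True
print(is_noindexed('<head><meta name="robots" content="index, follow" /></head>'))  # False
```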

Check HTTP X-Robots-Tag headers with curl -I to catch server-side noindex directives. Keep staging environments out of the index with password protection or a staging-only noindex, and make sure those staging directives never ship to production; robots.txt alone blocks crawling, not indexing, and it also prevents Google from seeing any noindex on the blocked pages. Add an automated CI test that fails when a public template emits noindex; Mister Nguyen Agency recommends logging every deployment that touches header templates to prevent regressions.
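The header check can be automated too. This sketch parses raw header text, such as the output of `curl -I https://example.com/page`, and reports whether any `X-Robots-Tag` header carries `noindex` or `none`, including the user-agent-scoped form (`X-Robots-Tag: googlebot: noindex`). The sample responses and the idea of failing a CI job on a non-empty result are assumptions about your setup.

```python
def header_noindex(raw_headers: str) -> bool:
    """True if any X-Robots-Tag header in raw HTTP response headers blocks indexing."""
    for line in raw_headers.splitlines():
        name, sep, value = line.partition(":")
        if not sep or name.strip().lower() != "x-robots-tag":
            continue  # status line or some other header
        for directive in value.split(","):
            token = directive.strip().lower()
            if ":" in token:
                # Drop an optional user-agent prefix, e.g. "googlebot: noindex".
                token = token.split(":", 1)[1].strip()
            if token in ("noindex", "none"):
                return True
    return False

# Hypothetical headers captured from public pages during a CI run.
responses = {
    "https://example.com/": "HTTP/2 200\r\ncontent-type: text/html\r\n",
    "https://example.com/blog/": "HTTP/2 200\r\nX-Robots-Tag: googlebot: noindex\r\n",
}
offenders = [url for url, headers in responses.items() if header_noindex(headers)]
print(offenders)  # ['https://example.com/blog/']
# A CI job would fail the deploy whenever `offenders` is non-empty.
```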

Aligning Your Sitemap: The Missing Pieces

Best Practices for Sitemap Submission

Keep each sitemap under 50,000 URLs and 50 MB uncompressed, or use a sitemap index; compress files with gzip, and list only canonical URLs with accurate <lastmod> timestamps. Submit sitemaps through Google Search Console and check the Sitemaps report for processing errors. For larger sites, split sitemaps by category or date, leave out URLs that are noindexed or blocked by robots.txt, and validate the files with an XML validator. Mister Nguyen Agency automates sitemap regeneration after deploys to keep counts in sync with the live site.
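As an illustration of these rules, here is a minimal sitemap generator built on Python's `xml.etree.ElementTree` and `gzip`. The URLs and dates are hypothetical, and the sketch assumes you already have a list of canonical URLs with real modification dates. It refuses to write more than 50,000 URLs to a single file.

```python
import gzip
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # protocol limit; the uncompressed file must also stay under 50 MB

def build_sitemap(entries):
    """Serialize (canonical_url, last_modified_date) pairs as a sitemap <urlset>."""
    entries = list(entries)
    if len(entries) > MAX_URLS_PER_SITEMAP:
        raise ValueError("too many URLs for one file; split them and use a sitemap index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()  # W3C date, e.g. 2024-05-10
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)

xml_bytes = build_sitemap([
    ("https://www.example.com/", date(2024, 5, 10)),
    ("https://www.example.com/services/seo-audit", date(2024, 4, 2)),
])
gz_bytes = gzip.compress(xml_bytes)  # write this out as sitemap.xml.gz
```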

How to Identify and Add Missing URLs

Run a site: search and compare its rough estimated count with your sitemap, crawl with Screaming Frog or Sitebulb to reveal unlisted pages, and parse server logs to find URLs that are crawled but not listed. Cross-check Search Console's Page indexing report filtered to your submitted sitemaps, where "Not indexed" reasons such as "Crawled - currently not indexed" and "Discovered - currently not indexed" highlight gaps. Prioritize the top 10% of pages by organic traffic to get faster indexing gains; Mister Nguyen Agency often starts there for quick wins.
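The comparison step boils down to a set difference. This sketch assumes you have a list of URLs found by your crawler or in your logs, the sitemap XML, and a hypothetical `clicks` dictionary (URL to organic clicks, for example from a Search Console export). It returns the missing URLs split into a top-10% priority group and the rest.

```python
import math
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Every <loc> value listed in a sitemap urlset."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)}

def missing_urls(found, sitemap_xml, clicks, share=0.10):
    """Split URLs that were found but are absent from the sitemap into (priority, rest)."""
    missing = sorted(set(found) - sitemap_urls(sitemap_xml))
    # Highest organic clicks first; URLs without click data sort last.
    ranked = sorted(missing, key=lambda url: clicks.get(url, 0), reverse=True)
    cutoff = math.ceil(len(ranked) * share)
    return ranked[:cutoff], ranked[cutoff:]

sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/shoes/</loc></url>
</urlset>"""
found = [
    "https://www.example.com/",
    "https://www.example.com/shoes/",
    "https://www.example.com/shoes/trail-runner",
    "https://www.example.com/shoes/road-racer",
    "https://www.example.com/boots/hiker",
]
clicks = {"https://www.example.com/shoes/road-racer": 120, "https://www.example.com/shoes/trail-runner": 40}

priority, rest = missing_urls(found, sitemap, clicks)
print(priority)  # ['https://www.example.com/shoes/road-racer']
```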

If you find thousands of missing product SKUs, add them by updating your sitemap generator or CMS plugin (Yoast, Rank Math) or by creating a dynamic sitemap feed; for enterprise sites split sitemaps into chunks of ≤50,000 URLs and regenerate on deploy. Resubmit the sitemap in Search Console and use the URL Inspection tool’s Request Indexing for priority pages. In one Mister Nguyen Agency case, splitting 120,000 products into 3 sitemaps and adding 4,200 high-value SKUs increased indexed product pages by 35% within four weeks.
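The chunking arithmetic is simple: 120,000 URLs at a 50,000-URL limit needs three files. Here is a sketch that splits a URL list and builds the matching sitemap index with `xml.etree.ElementTree`. The `sitemap-products-N.xml.gz` file naming is a hypothetical convention. Passing `chunk_size=40_000` would produce three even files instead.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def split_with_index(urls, base_url, chunk_size=50_000):
    """Split URLs into chunks of at most chunk_size and build a sitemap index listing each file."""
    chunks = [urls[i:i + chunk_size] for i in range(0, len(urls), chunk_size)]
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for number in range(1, len(chunks) + 1):
        entry = ET.SubElement(index, "sitemap")
        # Hypothetical naming scheme for the individual sitemap files.
        ET.SubElement(entry, "loc").text = f"{base_url}/sitemap-products-{number}.xml.gz"
    return chunks, ET.tostring(index, encoding="utf-8", xml_declaration=True)

products = [f"https://www.example.com/p/{n}" for n in range(120_000)]
chunks, index_xml = split_with_index(products, "https://www.example.com/sitemaps")
print([len(c) for c in chunks])  # [50000, 50000, 20000]
```

Submit only the index file in Search Console; Google discovers the individual sitemaps through it.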

The Duplicate Dilemma: Canonical Tag Issues

Duplicate URLs from pagination, tracking parameters, or session IDs often dilute your site's index coverage. Google treats rel="canonical" as a hint rather than a directive, so align server redirects, sitemap entries, and internal links with the canonical you choose. Audit duplicates with Search Console's URL Inspection tool and a crawler like Screaming Frog, then set absolute canonicals to the preferred HTTPS/www form. For a step-by-step remediation checklist, see How to Fix Google Indexing Issues: A Complete Guide.
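Aligning redirects, sitemaps, and internal links is easier when every system derives the same preferred URL. Here is a minimal normalization sketch with `urllib.parse`, assuming the preferred form is `https://www.example.com` and that `utm_*`, `gclid`, `fbclid`, and `sessionid` never change page content; your own parameter list will differ, and the function assumes every input URL belongs to the same site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters only track visits and never change the content.
TRACKING_PARAMS = {"gclid", "fbclid", "sessionid"}

def is_tracking(param: str) -> bool:
    name = param.lower()
    return name.startswith("utm_") or name in TRACKING_PARAMS

def canonical_url(url: str, preferred_host: str = "www.example.com") -> str:
    """Force HTTPS and the preferred host, and drop tracking parameters and fragments."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if not is_tracking(k)]
    return urlunsplit(("https", preferred_host, parts.path or "/", urlencode(kept), ""))

print(canonical_url("http://example.com/shoes/?utm_source=mail&color=red&sessionid=a1"))
# https://www.example.com/shoes/?color=red
```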

Recognizing and Resolving Duplicate Content

Scan for near-identical pages by comparing titles, meta descriptions, H1s, and main body text; sites with faceted navigation often produce thousands of near-duplicates you don't want indexed. Use the URL Inspection tool to confirm which version Google selected as canonical, then apply rel="canonical" to the preferred URL, implement 301 redirects for permanent consolidations, or add a meta robots noindex to utility pages. Mister Nguyen Agency recommends fixing the top 10–20 high-traffic URLs first to see measurable index improvements.
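A rough way to shortlist near-duplicates before manual review is to compare the main text of each pair of pages. This sketch uses `difflib.SequenceMatcher` from Python's standard library with an assumed 0.9 similarity threshold. Because it compares every pair, it suits a sample of a few hundred pages rather than a full faceted crawl.

```python
from difflib import SequenceMatcher
from itertools import combinations

def near_duplicates(pages: dict[str, str], threshold: float = 0.9):
    """Return (url_a, url_b, similarity) for page pairs whose main text is at least `threshold` similar."""
    pairs = []
    for (url_a, text_a), (url_b, text_b) in combinations(sorted(pages.items()), 2):
        similarity = SequenceMatcher(None, text_a, text_b).ratio()  # 0.0 (different) to 1.0 (identical)
        if similarity >= threshold:
            pairs.append((url_a, url_b, round(similarity, 3)))
    return pairs

# Hypothetical extracted body text for three URLs.
pages = {
    "/shoes?color=red": "Red running shoe, lightweight mesh, size 8-12.",
    "/shoes?color=blue": "Blue running shoe, lightweight mesh, size 8-12.",
    "/about": "We are a family-run store founded in 2010.",
}
for url_a, url_b, similarity in near_duplicates(pages):
    print(f"{url_a} ~ {url_b}: {similarity}")
```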

Best Practices for Implementing Canonical Tags

Use absolute URLs in rel="canonical", including the protocol and a trailing slash that matches your site's URL convention. Make sure the canonical target returns a 200 status and carries matching hreflang annotations where applicable, and avoid canonical chains or canonicals that point to 404s. Keep canonicals self-referential on unique content; for filter and sort parameters, canonicalize to the clean product or category URL and reflect that choice in your XML sitemap and internal links.
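Some of these rules can be checked straight from the HTML. The sketch below, built on Python's `html.parser`, flags pages with zero or several canonical tags and canonicals that are not absolute HTTPS URLs. Checking that the target returns 200 or has matching hreflang needs separate HTTP requests, which this sketch does not attempt.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

class CanonicalParser(HTMLParser):
    """Collects href values from <link rel="canonical"> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "link" and "canonical" in attributes.get("rel", "").lower().split():
            self.hrefs.append(attributes.get("href", ""))

def canonical_problems(html: str) -> list[str]:
    """List rule violations for the canonical tags found in one page's HTML."""
    parser = CanonicalParser()
    parser.feed(html)
    problems = []
    if len(parser.hrefs) != 1:
        problems.append(f"expected exactly one canonical, found {len(parser.hrefs)}")
    for href in parser.hrefs:
        parts = urlsplit(href)
        if parts.scheme != "https" or not parts.netloc:
            problems.append(f"canonical is not an absolute HTTPS URL: {href!r}")
    return problems

print(canonical_problems('<link rel="canonical" href="/shoes/">'))
# ["canonical is not an absolute HTTPS URL: '/shoes/'"]
```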

Test canonicals with a crawler to spot chains longer than one hop, and confirm Google's selected canonical in Search Console. Where possible, fix duplicates with server-side 301 redirects rather than relying solely on HTML tags, and handle query parameters with consistent URL rules (Search Console's URL Parameters tool was retired in 2022). For variants such as color or size, canonicalize variant pages to the main product URL only when their content is nearly identical; otherwise keep self-referencing canonicals on each variant and add product structured data so variants stay eligible for commerce-rich snippets.
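If your crawler can export each URL with the canonical it declares, chain detection is a short graph walk. This sketch assumes such an export has been loaded into a hypothetical `declared` dictionary (URL to declared canonical). It reports URLs that need more than one hop to reach a self-canonical page, and it also catches loops.

```python
def canonical_chains(declared: dict[str, str], max_hops: int = 10) -> dict[str, list[str]]:
    """Return URLs whose declared canonical needs more than one hop to reach a self-canonical page."""
    chains = {}
    for start in declared:
        path = [start]
        current = start
        # Keep following while the current URL declares a different canonical.
        while current in declared and declared[current] != current and len(path) <= max_hops:
            current = declared[current]
            path.append(current)
            if path.count(current) > 1:
                break  # canonical loop
        if len(path) - 1 > 1:
            chains[start] = path
    return chains

declared = {
    "/shoes?sort=price": "/shoes?sort=price&page=1",  # points at another duplicate...
    "/shoes?sort=price&page=1": "/shoes/",            # ...which points at the real page
    "/shoes/": "/shoes/",                             # self-referential
    "/shoes?color=red": "/shoes/",                    # single hop: fine
}
print(canonical_chains(declared))
# {'/shoes?sort=price': ['/shoes?sort=price', '/shoes?sort=price&page=1', '/shoes/']}
```

Fix a flagged chain by pointing the first URL directly at the final canonical.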

Unmasking the Crawl Budget: Inefficient Use

Large sites often leak crawl budget to low-value URLs, duplicate content, and parameterized faceted navigation, leaving priority pages unindexed; Mister Nguyen Agency routinely audits these leaks so you can reclaim crawl activity for revenue-driving pages. Compare Googlebot hits in your server logs with the URLs in your submitted sitemaps to see where waste occurs and how much you can realistically recover.
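A first-pass waste metric is the share of Googlebot hits that land outside your sitemap. This sketch assumes you have already extracted the requested paths of verified Googlebot requests from your access logs and have the set of sitemap paths; the sample data is hypothetical.

```python
from urllib.parse import urlsplit

def crawl_waste(googlebot_hits, sitemap_paths):
    """Summarize how many Googlebot hits landed outside the sitemap or on parameterized URLs."""
    outside = [hit for hit in googlebot_hits if hit not in sitemap_paths]
    parameterized = [hit for hit in googlebot_hits if urlsplit(hit).query]
    total = len(googlebot_hits)
    return {
        "total_hits": total,
        "outside_sitemap": len(outside),
        "parameterized": len(parameterized),
        "waste_share": len(outside) / total if total else 0.0,
    }

hits = ["/p/1", "/p/1", "/p/2?color=red", "/search?q=boots", "/p/3"]
print(crawl_waste(hits, {"/p/1", "/p/2", "/p/3"}))
# {'total_hits': 5, 'outside_sitemap': 2, 'parameterized': 2, 'waste_share': 0.4}
```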

Factors Affecting Your Crawl Budget

Server response times, frequency of content changes, site size, and the volume of low-value or duplicate pages all shape how often Googlebot visits your domain; aggressive redirect chains and soft 404s further drain crawl quota. Use Search Console and server logs to quantify waste before you act.

- Slow TTFB or intermittent 5xx errors cause Google to throttle crawl.

- Millions of URLs with minor variations (URL parameters, filters) inflate indexing attempts.

- Thin content and duplicate pages consume cycles without SEO benefit.

- Excessive redirects and non-canonical internal links confuse crawl paths.

- Poor sitemap hygiene and sparse internal linking hide priority URLs.

- You should prioritize high-value clusters and block or noindex low-value paths to direct crawl activity.

Strategies to Optimize Crawl Efficiency

Use server logs to identify high-traffic crawl paths, then block parameterized faceted URLs via robots.txt or noindex rules, consolidate sitemaps into 50k-URL chunks, and fix 5xx errors so Googlebot spends cycles on indexable content; canonical tags and targeted internal linking can further funnel crawl to your best pages.

For example, Mister Nguyen Agency cut crawl waste by 35% on a 120k-page retailer by disallowing common faceted parameters, adding noindex to pagination and filtered views, merging sitemaps into 10 well-structured files, and reducing TTFB from ~800ms to ~220ms. After the optimization, Googlebot requests rose ~60% and thousands more product pages were indexed within six weeks, showing how tactical fixes translate into measurable indexing gains.
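Rules for faceted parameters usually rely on the `*` and `$` wildcards that Google supports, which Python's `urllib.robotparser` does not. The sketch below is a simplified, hypothetical matcher that follows Google's documented precedence: the longest matching rule wins, and `Allow` wins a tie. It is meant for testing rule ideas offline, not as a replacement for Search Console's robots.txt report.

```python
import re

def _pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern: '*' matches anything, a trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = "".join(".*" if ch == "*" else re.escape(ch) for ch in body)
    return re.compile(regex + ("$" if anchored else ""))

def is_allowed(path_and_query: str, rules: list[tuple[str, str]]) -> bool:
    """Apply (directive, pattern) rules for one user-agent group to a URL path plus query."""
    best = None  # (pattern length, allowed?) of the most specific matching rule so far
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty Disallow blocks nothing
        if _pattern_to_regex(pattern).match(path_and_query):
            candidate = (len(pattern), directive.lower() == "allow")
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]

# Hypothetical rules: block every parameterized URL except pagination.
RULES = [("disallow", "/*?"), ("allow", "/*?page=")]

for path in ["/shoes", "/shoes?color=red", "/shoes?page=2"]:
    print(path, is_allowed(path, RULES))
```

Keeping pagination crawlable matters if those pages carry a noindex, because Google can only obey a noindex on pages it is allowed to fetch.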

The URL Structure Conundrum: Best Practices

Importance of Clean and Descriptive URLs

You should use short, lowercase URLs with hyphens, placing primary keywords near the start (e.g., /services/seo-audit). Avoid session IDs, long query strings, or duplicate paths; Google favors readable slugs and users click links with ≤60 characters more often. Mister Nguyen Agency finds descriptive URLs reduce bounce by ~12% on landing pages and make internal linking, analytics, and canonicalization far simpler to manage.
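Slug rules like these are easy to enforce in code. Here is a minimal slug generator using Python's `unicodedata` and `re`; the 60-character default simply mirrors the figure above and is an assumption you can tune. Accents are stripped to plain ASCII, and truncation happens at a hyphen so words are not cut in half.

```python
import re
import unicodedata

def slugify(title: str, max_length: int = 60) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Strip accents (é becomes e) and drop characters with no ASCII equivalent.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Collapse every run of non-alphanumeric characters into a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")
    if len(slug) > max_length:
        # Cut at the last hyphen within the limit so no word is chopped in half.
        cut = slug[: max_length + 1].rsplit("-", 1)[0]
        slug = cut if 0 < len(cut) <= max_length else slug[:max_length].rstrip("-")
    return slug

print(slugify("SEO Audit: Technical & On-Page Review"))  # seo-audit-technical-on-page-review
print(slugify("Café Crème Guide"))                        # cafe-creme-guide
```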

How to Restructure URLs without Losing Traffic

Audit current URLs, build a 1:1 redirect map, and implement 301s—no redirect chains—to preserve link equity. Update internal links, canonical tags, XML sitemap, and hreflang entries, then submit the new sitemap to Search Console. Track organic clicks and impressions in Google Search Console and GA over 30–90 days; Mister Nguyen Agency retained 98% of organic traffic after migrating 1,200 product pages using this workflow.
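Redirect maps built over several migrations often hide chains, where an old URL points to a URL that is itself redirected. This sketch flattens a hypothetical old-to-new mapping so every entry points straight at its final destination, and it raises an error on loops.

```python
def flatten_redirects(redirect_map: dict[str, str]) -> dict[str, str]:
    """Point every old URL straight at its final destination, so no redirect chains remain."""
    flat = {}
    for source, target in redirect_map.items():
        seen = {source}
        # Follow the chain while the target is itself being redirected.
        while target in redirect_map:
            if target in seen:
                raise ValueError(f"redirect loop starting at {source}")
            seen.add(target)
            target = redirect_map[target]
        flat[source] = target
    return flat

redirects = {
    "/products/old-widget": "/products/widget-2023",
    "/products/widget-2023": "/shop/widget",  # an older migration left a second hop
    "/about-us.html": "/about/",
}
print(flatten_redirects(redirects))
```

Loading the flattened map into your server config keeps every 301 to a single hop.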

Start with log-file analysis and Screaming Frog to identify high-traffic and high-backlink pages; prioritize those for flawless 301s and outreach to update external links. Keep a rollback plan, QA everything on a staging domain, validate redirects with HTTP status checks, and avoid changing the URLs of pages that rank in the top 10 results unless you have a compelling reason. Recovery often completes within 2–12 weeks if redirects and sitemaps are handled correctly.
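To validate redirects after launch, compare the planned map with what the server actually returns. This sketch assumes the observed results (status code and `Location` header per old URL) come from a crawler's list-mode export or from `curl -I` runs, loaded into a hypothetical dictionary. It strictly expects 301, matching the workflow above.

```python
def redirect_problems(redirect_map, observed):
    """Compare planned redirects with observed (status, Location) pairs for each old URL."""
    problems = []
    for old_url, expected in redirect_map.items():
        status, location = observed.get(old_url, (None, None))
        if status != 301:
            problems.append(f"{old_url}: expected 301, got {status}")
        elif location != expected:
            problems.append(f"{old_url}: redirects to {location}, expected {expected}")
    return problems

planned = {"/old-shoes": "/shoes/", "/old-boots": "/boots/", "/sale-2023": "/sale/"}
observed = {
    "/old-shoes": (301, "/shoes/"),
    "/old-boots": (302, "/boots/"),     # temporary redirect: should be permanent
    "/sale-2023": (301, "/sale/2024"),  # wrong destination
}
for problem in redirect_problems(planned, observed):
    print(problem)
```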

Conclusion

In short, you can resolve common Google indexing issues by auditing robots.txt, sitemaps, crawl errors, canonical tags, and mobile usability; prioritize fixes, monitor Search Console, and use structured data to protect your visibility. This systematic approach, with guidance from Mister Nguyen Agency, helps you keep pages indexed and grow organic traffic.